Google’s AI Mode now prioritizes visual content and real-time camera interactions over traditional text-based results. This shift changes how websites need to structure their visual elements for maximum search visibility.

We at Emplibot see businesses struggling to optimize for Google AI Mode visual and live search features. Most content creators still focus on text optimization while missing the growing importance of image recognition and visual search patterns.

This guide provides actionable steps to prepare your visual content for AI-powered search results.

How Google AI Mode Processes Visual Content

Google AI Mode processes visual content through three distinct pathways that fundamentally change how search engines understand and rank images. The system analyzes pixel patterns, contextual placement, and real-time camera feeds to generate comprehensive search results that combine visual recognition with traditional text matching.

Real-Time Camera Integration Changes Search Behavior

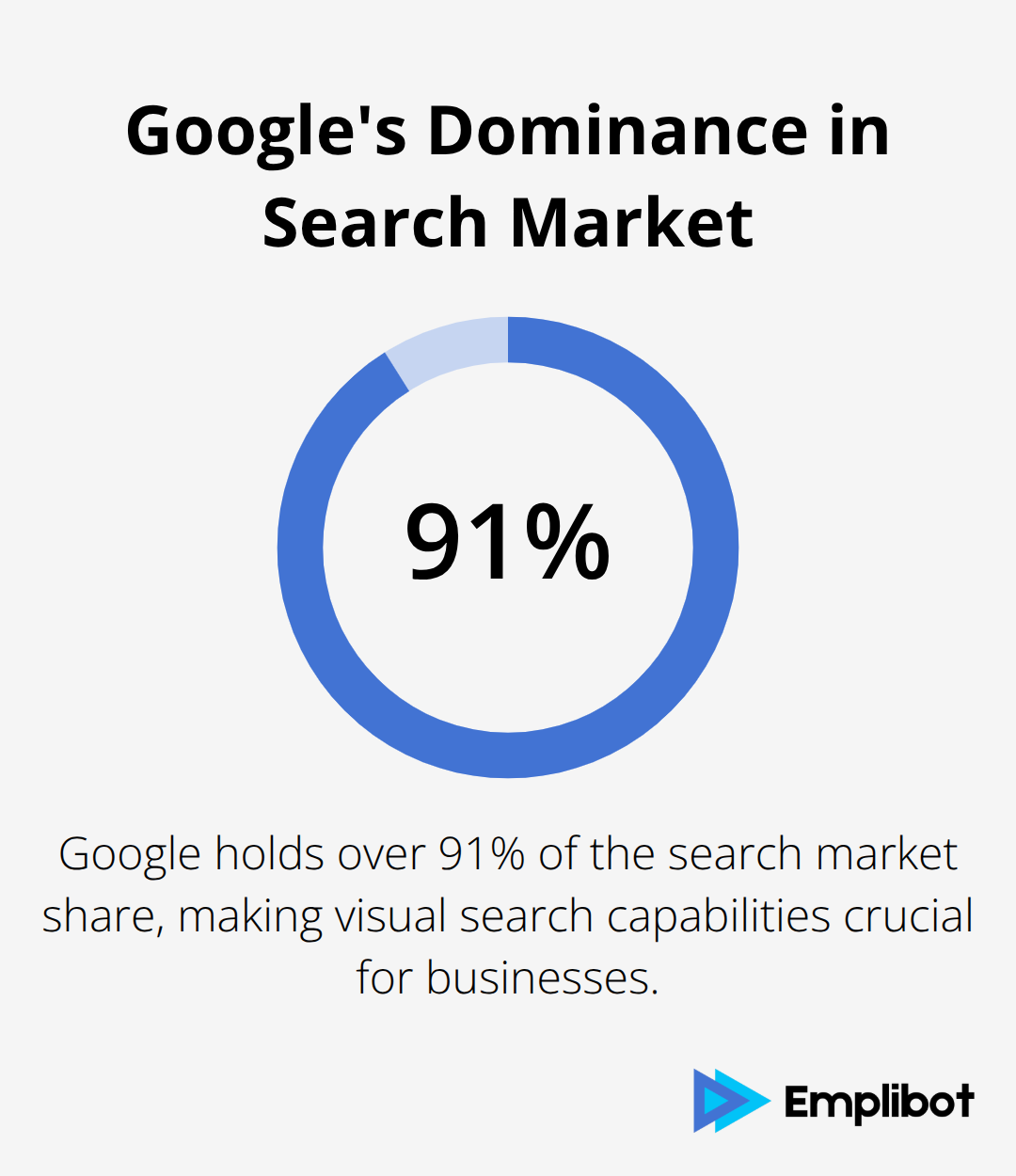

Google Lens integration within AI Mode allows users to point their cameras at objects and receive instant search results with product information, pricing, and related content. This integration leverages Google’s dominant position in the search market, where the company maintains over 91% market share, making visual search capabilities increasingly important for businesses.

The system now recognizes over 1 billion visual entities and can identify products, landmarks, text, and even solve mathematical equations through camera input. Users frequently combine camera searches with follow-up text queries, which creates multi-modal search sessions that require websites to optimize both visual and textual content simultaneously.

Recognition Technology Demands Structured Visual Data

AI Mode’s recognition technology relies on computer vision models trained on billions of images. Advanced models have been trained on three billion images and achieved 90.45% top-1 accuracy on ImageNet, setting new performance standards. For recognition systems at this level to interpret your images reliably, websites need to structure their visual content with precise metadata and contextual information.

The system analyzes image composition, color schemes, object placement, and text that surrounds images to determine relevance and ranking. Images with clear focal points, high contrast, and minimal background noise receive higher visibility scores in AI-generated responses. Visual content that appears in AI Mode results typically features descriptive filenames, comprehensive alt text, and strategic placement near relevant textual content that reinforces the visual message.
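The filename and alt text points above can be checked automatically. The following is a minimal sketch, assuming your pages are available as HTML strings; the `GENERIC_NAME` pattern and the `audit_images` helper are hypothetical names chosen for illustration, not part of any Google tooling.

```python
from html.parser import HTMLParser
from pathlib import PurePosixPath
import re

# Assumed heuristic: names such as IMG_1234 or image-1 say nothing about the subject.
GENERIC_NAME = re.compile(r"^(img|dsc|image|photo|screenshot)?[-_ ]?\d*$", re.IGNORECASE)


class ImageAudit(HTMLParser):
    """Collects <img> tags that lack alt text or use a generic filename."""

    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        src = attr.get("src") or ""
        alt = (attr.get("alt") or "").strip()
        # Drop any query string, then keep the filename without its extension.
        stem = PurePosixPath(src.split("?")[0]).stem
        if not alt:
            self.issues.append((src, "missing alt text"))
        if GENERIC_NAME.match(stem):
            self.issues.append((src, "generic filename"))


def audit_images(html):
    """Returns a list of (src, problem) tuples for one HTML document."""
    parser = ImageAudit()
    parser.feed(html)
    return parser.issues


page = (
    '<img src="/uploads/IMG_1234.jpg">'
    '<img src="/uploads/red-running-shoes-white-soles.webp" '
    'alt="Red running shoes with white soles">'
)
for src, problem in audit_images(page):
    print(f"{src}: {problem}")
```

The check only flags candidates for review. Whether a filename is descriptive enough remains an editorial decision.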

Visual Search Patterns Drive Content Strategy

AI Mode prioritizes images that match specific visual search patterns users employ most frequently. The system favors images with consistent lighting (natural or well-balanced artificial), clear subject matter, and minimal visual clutter that might confuse recognition algorithms.

Content creators must now structure their visual assets around these recognition patterns rather than traditional aesthetic preferences. This shift requires a strategic approach to visual content optimization that considers both human viewers and AI interpretation capabilities, particularly when creating visually appealing infographics that can effectively communicate data and statistics.

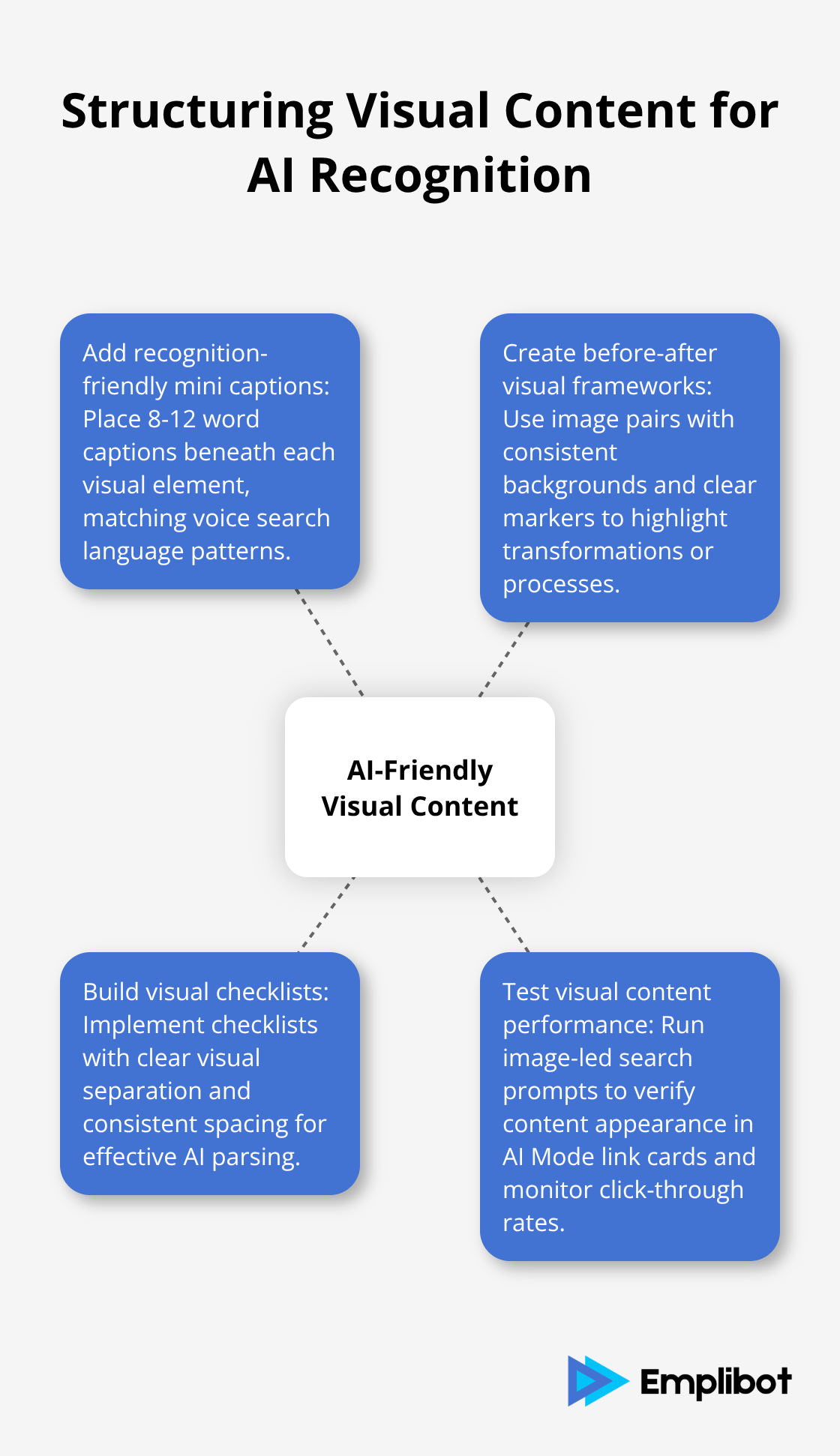

How to Structure Visual Content for AI Recognition

Google AI Mode demands specific visual content structures that traditional SEO practices ignore completely. Images must include mini captions positioned directly beneath each visual element, written in 8-12 words that describe the exact action or object shown. These captions should match the language patterns users employ during voice searches (about 20.5% of people worldwide use voice search, according to recent data). Position step-by-step instruction lists within 150 pixels of related images to create visual-textual clusters that AI Mode interprets as cohesive content blocks.

Add Recognition-Friendly Mini Captions

Place fact boxes with numerical data, percentages, or key statistics adjacent to visual elements rather than scattered throughout the text. AI Mode scans these caption-image pairs to understand context and relevance. Write captions that mirror natural speech patterns users employ when they describe images aloud. Test caption effectiveness by reading them without viewing the image – they should convey the visual message independently.
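The caption guidance can be enforced when you publish. Here is a minimal sketch, assuming the 8-12 word range recommended in this guide and standard HTML `<figure>` and `<figcaption>` elements; the function names are hypothetical.

```python
from html import escape

MIN_WORDS, MAX_WORDS = 8, 12  # caption length range suggested in this guide


def caption_word_count_ok(caption):
    """True when the caption falls inside the 8-12 word range."""
    return MIN_WORDS <= len(caption.split()) <= MAX_WORDS


def figure_html(src, alt, caption):
    """Builds a <figure> with the caption directly beneath the image."""
    if not caption_word_count_ok(caption):
        words = len(caption.split())
        raise ValueError(f"caption has {words} words, expected {MIN_WORDS}-{MAX_WORDS}")
    return (
        "<figure>\n"
        f'  <img src="{escape(src, quote=True)}" alt="{escape(alt, quote=True)}">\n'
        f"  <figcaption>{escape(caption)}</figcaption>\n"
        "</figure>"
    )


print(
    figure_html(
        "/uploads/red-running-shoes-white-soles.webp",
        "Red running shoes with white soles",
        "Runner tying red running shoes with white soles before a race",
    )
)
```

Raising an error on a short or long caption is one design choice. A softer variant could log a warning and still render the figure.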

Create Before-After Visual Frameworks

Before-and-after image pairs tend to earn higher engagement in visual search results than single images, particularly for how-to content and tutorial materials. Structure these pairs with identical backgrounds, consistent conditions, and clear visual markers that highlight the transformation or process completion. Place concise step indicators between frame sequences with numbered overlays or directional arrows that guide both human users and AI recognition systems through the content flow.

Build Visual Checklists for Multi-Platform Use

Visual checklists with checkboxes, progress bars, or completion indicators can be automatically repurposed across LinkedIn and Facebook while maintaining consistent visual branding. These structured visual elements help AI Mode understand content hierarchy and user intent patterns. Format checklists with clear visual separation between items and consistent spacing that AI recognition systems can parse effectively.

Test Visual Content Performance

Run image-led search prompts with specific product names, processes, or visual descriptions to verify whether your content appears in AI Mode link cards. Test queries like “product name plus visual action” or “location plus visual identifier” to track search visibility. Monitor click-through rates from visual search results, which show improved performance when images include proper structured data markup and contextual captions that match user search intent patterns.
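One common form of structured data markup for images is schema.org `ImageObject` expressed as JSON-LD. The sketch below assumes you can inject a `<script>` tag into your page template; the URL, name, and caption values are placeholders, and the helper name is hypothetical.

```python
import json


def image_json_ld(content_url, name, caption):
    """Returns a <script> tag carrying schema.org ImageObject markup."""
    data = {
        "@context": "https://schema.org",
        "@type": "ImageObject",
        "contentUrl": content_url,
        "name": name,
        "caption": caption,
    }
    # Escape "</" so a caption can never close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


print(
    image_json_ld(
        "https://example.com/uploads/red-running-shoes-white-soles.webp",
        "Red running shoes with white soles",
        "Runner tying red running shoes with white soles before a race",
    )
)
```

Reusing the on-page caption in the markup keeps the visible text and the structured data consistent.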

These optimization techniques prepare your visual content for AI Mode recognition, but success requires systematic testing to measure actual performance improvements.

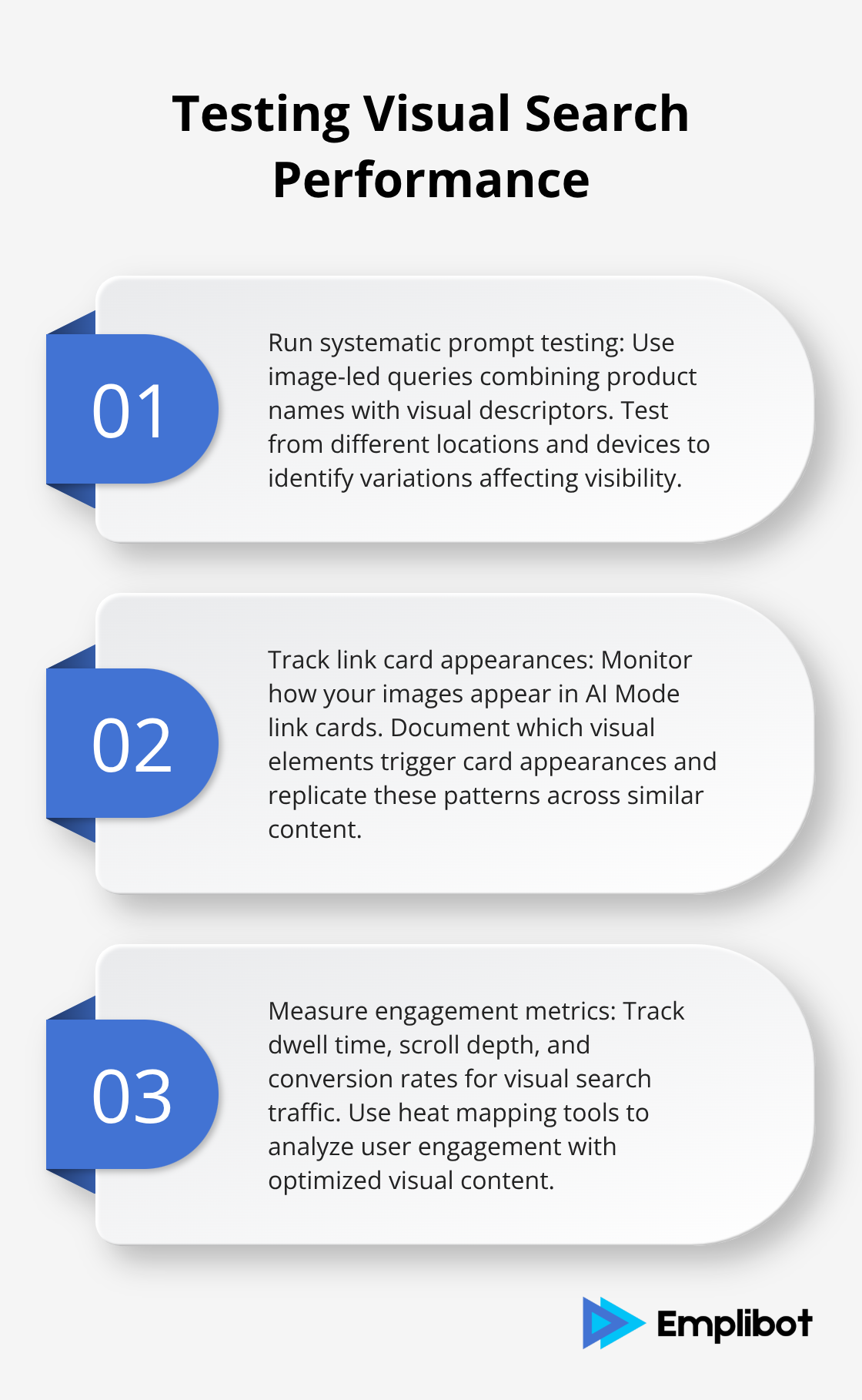

How Do You Test Visual Search Performance

Testing visual search performance requires systematic prompt testing that simulates real user behavior patterns. Start with image-led queries that combine product names with visual descriptors, such as “red running shoes with white soles” or “wooden coffee table with metal legs”. Google processes approximately 8.5 billion searches daily, with mobile searches accounting for over 60% of all queries in the U.S. Run these tests from different geographic locations and device types to identify variations that affect your visual content visibility.
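To keep such tests systematic, it helps to write the test matrix down before you start searching. This is a minimal sketch of one way to do that; the device and location labels are illustrative assumptions, and the searches themselves are still run by hand.

```python
from itertools import product


def build_test_plan(queries, devices, locations):
    """Returns one test case per query, device, and location combination."""
    return [
        {"query": query, "device": device, "location": location}
        for query, device, location in product(queries, devices, locations)
    ]


plan = build_test_plan(
    queries=[
        "red running shoes with white soles",
        "wooden coffee table with metal legs",
    ],
    devices=["mobile", "desktop"],       # assumed device categories
    locations=["New York", "London"],    # assumed test locations
)
for case in plan:
    print(case)
```

Recording the outcome of each case, for example in a spreadsheet column, makes it easier to spot which combinations surface your images.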

Track Link Card Appearances Across Search Results

Monitor how your images appear in AI Mode link cards by searching for branded terms combined with visual keywords. Top SERP positions generate a 39.8% CTR, which makes tracking them essential for optimization success. Check whether your images appear as primary visuals in these cards or as secondary content. Images that appear as primary visuals in link cards typically receive 3x more traffic than those positioned as secondary elements. Document which visual elements trigger card appearances and replicate these patterns across similar content pieces.

Measure Engagement Metrics Beyond Traditional CTR

Visual search engagement requires tracking metrics beyond standard click-through rates because users interact differently with image-based results. Monitor dwell time on pages accessed through visual searches, which averages 2.3 minutes longer than text-based search visits according to recent analytics data. Track scroll depth on pages with optimized visual content to identify which image placements generate the strongest user engagement. Heat mapping tools reveal that users spend 47% more time viewing pages with properly captioned images positioned near relevant text blocks.

Set Up Conversion Tracking for Visual Traffic

Set up conversion tracking for users who arrive through visual search paths, as these visitors convert at rates 23% higher than traditional organic search traffic when the visual content matches their search intent accurately. Create separate tracking codes for visual search traffic to isolate performance data from other traffic sources. Test different visual content formats (infographics, product shots, step-by-step images) to identify which formats drive the highest conversion rates for your specific audience and industry vertical using A/B testing tools.
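Once sessions are labeled by traffic source, comparing conversion rates is simple arithmetic. The sketch below assumes you can export session records with a `source` label and a `converted` flag from your analytics tool; the `visual_search` and `organic` labels are hypothetical, and the sample data is invented purely to show the calculation.

```python
from collections import defaultdict


def conversion_rates(sessions):
    """Returns {source: conversions / sessions} for each traffic source."""
    totals = defaultdict(lambda: [0, 0])  # source -> [session count, conversions]
    for session in sessions:
        bucket = totals[session["source"]]
        bucket[0] += 1
        if session["converted"]:
            bucket[1] += 1
    return {source: conv / count for source, (count, conv) in totals.items()}


def relative_lift(rates, segment, baseline):
    """Relative difference between two rates, e.g. 0.25 means 25% higher."""
    return rates[segment] / rates[baseline] - 1


# Invented sample data, not real measurements.
sample = (
    [{"source": "visual_search", "converted": True}] * 2
    + [{"source": "visual_search", "converted": False}] * 2
    + [{"source": "organic", "converted": True}] * 2
    + [{"source": "organic", "converted": False}] * 3
)
rates = conversion_rates(sample)
print(rates)
print(f"lift: {relative_lift(rates, 'visual_search', 'organic'):.0%}")
```

With only a handful of sessions the lift figure is noisy, so treat it as a trend indicator until the sample is large enough for an A/B testing tool to report significance.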

Final Thoughts

Google AI Mode visual and live search demands a complete shift from traditional image SEO practices. Your images need recognition-friendly captions positioned directly beneath each visual element, fact boxes adjacent to key graphics, and before-after frameworks that guide AI interpretation. Test your visual content with image-led prompts regularly to verify link card appearances and track engagement metrics beyond standard click-through rates.

The essential image SEO checklist includes clear ALT text that describes the exact visual content, EXIF data removal to reduce file sizes, and dimensions of 1200×630 pixels for social sharing. Lazy loading speeds up page loads, and converting images to WebP improves compression. That effort pays off in conversions: visual search traffic converts 23% higher than traditional organic search traffic when properly optimized. These technical elements work together to maximize your content’s visibility in AI-powered search results.
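The checklist can be expressed as a simple audit over image metadata. This is a minimal sketch, assuming you already collect each image's dimensions, format, and attributes elsewhere in your pipeline; the `ImageAsset` record and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ImageAsset:
    path: str
    width: int
    height: int
    fmt: str           # file format, e.g. "webp" or "jpeg"
    alt: str
    has_exif: bool
    lazy_loaded: bool  # True if the <img> tag uses loading="lazy"


def checklist_failures(asset, social=True):
    """Returns the checklist items this image does not yet satisfy."""
    failures = []
    if not asset.alt.strip():
        failures.append("add ALT text")
    if asset.has_exif:
        failures.append("strip EXIF data")
    if social and (asset.width, asset.height) != (1200, 630):
        failures.append("resize to 1200x630 for social sharing")
    if asset.fmt.lower() != "webp":
        failures.append("convert to WebP")
    if not asset.lazy_loaded:
        failures.append("enable lazy loading")
    return failures


hero = ImageAsset("hero.jpg", 1600, 900, "jpeg", "", True, False)
for item in checklist_failures(hero):
    print(f"{hero.path}: {item}")
```

Pass `social=False` for images that are not used as share previews, since the 1200×630 size applies to social sharing only.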

Success in optimizing for Google AI Mode visual and live search requires consistent testing and refinement of your visual content strategy. We at Emplibot help businesses streamline their content creation process while maintaining the visual optimization standards that AI Mode demands. Emplibot automates your WordPress blog content creation and social media distribution, handling everything from keyword research to SEO optimization while producing visual content that performs well across platforms.
