At Emplibot, we’ve seen firsthand how data quality can make or break AI projects.

Data quality in AI is not just a buzzword; it’s the foundation of reliable and effective machine learning models.

Poor data quality can lead to biased results, inaccurate predictions, and wasted resources.

In this post, we’ll explore why data quality matters in AI and how you can improve it for better outcomes.


Why Data Quality Is Critical for AI

The Foundation of Effective AI Systems

Data quality in AI refers to the accuracy, completeness, consistency, and relevance of the information used to train and operate machine learning models. High-quality data is the foundation of effective AI systems and directly affects their performance and reliability.

The Direct Link Between Data and AI Performance

A robust data governance and data quality strategy is a prerequisite for AI success in business. Early adopters of AI, ML, and generative AI often ran into problems caused by poor data quality, which highlights the strong correlation between data quality and AI success.

Poor data quality can trigger a domino effect of issues. For example, a financial services company struggled with their AI-powered fraud detection system. The model incorrectly flagged legitimate transactions as fraudulent, causing customer frustration. An investigation uncovered that their training data contained outdated transaction patterns, which led to inaccurate predictions.

The Hidden Costs of Poor Data Quality

The consequences of poor data quality extend far beyond model inaccuracy. According to Gartner research from 2020, poor data quality costs organizations an average of $12.9 million a year. This figure includes both direct costs, such as wasted resources, and indirect costs, such as missed opportunities and reputational damage.

A healthcare provider using AI for patient diagnosis faced severe consequences when their model made incorrect recommendations due to inconsistent data formatting. This led to misdiagnoses, potentially endangering patient lives and exposing the organization to legal risks.

Ensuring Data Quality: An Ongoing Process

Maintaining high data quality requires continuous effort. We recommend implementing automated data quality checks at every stage of your AI pipeline. Tools like Great Expectations or Deequ can automate these checks, ensuring that only high-quality data enters your system.
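Great Expectations and Deequ each define checks through their own APIs, which we don't reproduce here. As a rough, dependency-free illustration of the idea they share, the sketch below declares expectations as named predicates and runs every incoming batch against them. The rule names, the allowed currency codes, and the `validate_batch` and `batch_passes` helpers are all hypothetical.

```python
from typing import Any, Callable

Record = dict[str, Any]
Expectation = Callable[[Record], bool]

# Hypothetical expectations for a transactions feed; each is a named predicate over one record.
EXPECTATIONS: dict[str, Expectation] = {
    "amount_is_non_negative": lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    "currency_is_known": lambda r: r.get("currency") in {"USD", "EUR", "GBP"},
    "customer_id_present": lambda r: bool(r.get("customer_id")),
}


def validate_batch(records: list[Record]) -> dict[str, list[int]]:
    """Return, for each expectation, the indices of records that violate it."""
    failures: dict[str, list[int]] = {name: [] for name in EXPECTATIONS}
    for i, record in enumerate(records):
        for name, check in EXPECTATIONS.items():
            if not check(record):
                failures[name].append(i)
    return failures


def batch_passes(records: list[Record]) -> bool:
    """A batch may move to the next pipeline stage only if no expectation failed."""
    return all(not indices for indices in validate_batch(records).values())


batch = [
    {"customer_id": "c1", "amount": 42.0, "currency": "USD"},
    {"customer_id": "", "amount": -5, "currency": "XYZ"},
]
print(validate_batch(batch))
# {'amount_is_non_negative': [1], 'currency_is_known': [1], 'customer_id_present': [1]}
print(batch_passes(batch))  # False
```

Reporting failing record indices per rule, rather than a single pass/fail flag, makes it easier to quarantine just the bad rows and trace which check caught them.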

Additionally, the involvement of domain experts in data curation can significantly improve data quality. These experts can identify subtle inconsistencies or biases that automated systems might miss. A retail company improved their recommendation engine’s accuracy by 25% after involving product managers in the data curation process.

The Role of Data Governance

Effective data governance policies play a crucial role in maintaining data quality. These policies should outline clear standards for data collection, storage, and usage. They should also define roles and responsibilities for data management within the organization.

Organizations that implement robust data governance frameworks often see improvements in data quality and, consequently, in their AI initiatives. For instance, a manufacturing company reduced errors in their predictive maintenance models by 40% after implementing a comprehensive data governance strategy.

As we move forward, let’s explore some common data quality issues that organizations face when implementing AI solutions, and how these issues can impact the effectiveness of AI models.

Common Data Quality Issues in AI

Incomplete or Missing Data

Incomplete or missing data plagues many AI projects. This issue can lead to skewed predictions in various AI applications, such as recommendation engines.

To combat this issue, organizations can implement a data completeness score for each record. Any record falling below a certain completeness threshold should be flagged for review. This simple step can improve model accuracy significantly.
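A completeness score can be as simple as the share of required fields that are actually filled in. The sketch below assumes a hypothetical set of required fields and a 0.75 threshold; both should be chosen per dataset.

```python
import math
from typing import Any

# Hypothetical set of fields a record needs in order to be useful for training.
REQUIRED_FIELDS = ("user_id", "age", "country", "last_purchase")


def is_missing(value: Any) -> bool:
    """Treat None, blank strings, and float NaN as missing."""
    if value is None:
        return True
    if isinstance(value, str):
        return value.strip() == ""
    if isinstance(value, float):
        return math.isnan(value)
    return False


def completeness_score(record: dict[str, Any], fields: tuple[str, ...] = REQUIRED_FIELDS) -> float:
    """Fraction of required fields that are present and non-empty (0.0 to 1.0)."""
    present = sum(not is_missing(record.get(f)) for f in fields)
    return present / len(fields)


def flag_incomplete(records: list[dict[str, Any]], threshold: float = 0.75) -> list[int]:
    """Indices of records whose completeness falls below the threshold."""
    return [i for i, r in enumerate(records) if completeness_score(r) < threshold]


records = [
    {"user_id": 1, "age": 34, "country": "DE", "last_purchase": "2024-05-01"},
    {"user_id": 2, "age": None, "country": "", "last_purchase": "2024-04-11"},
    {"user_id": 3, "age": 51, "country": "FR"},
]
print([completeness_score(r) for r in records])  # [1.0, 0.5, 0.75]
print(flag_incomplete(records))                  # [1]
```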

Data Inconsistency and Contradictions

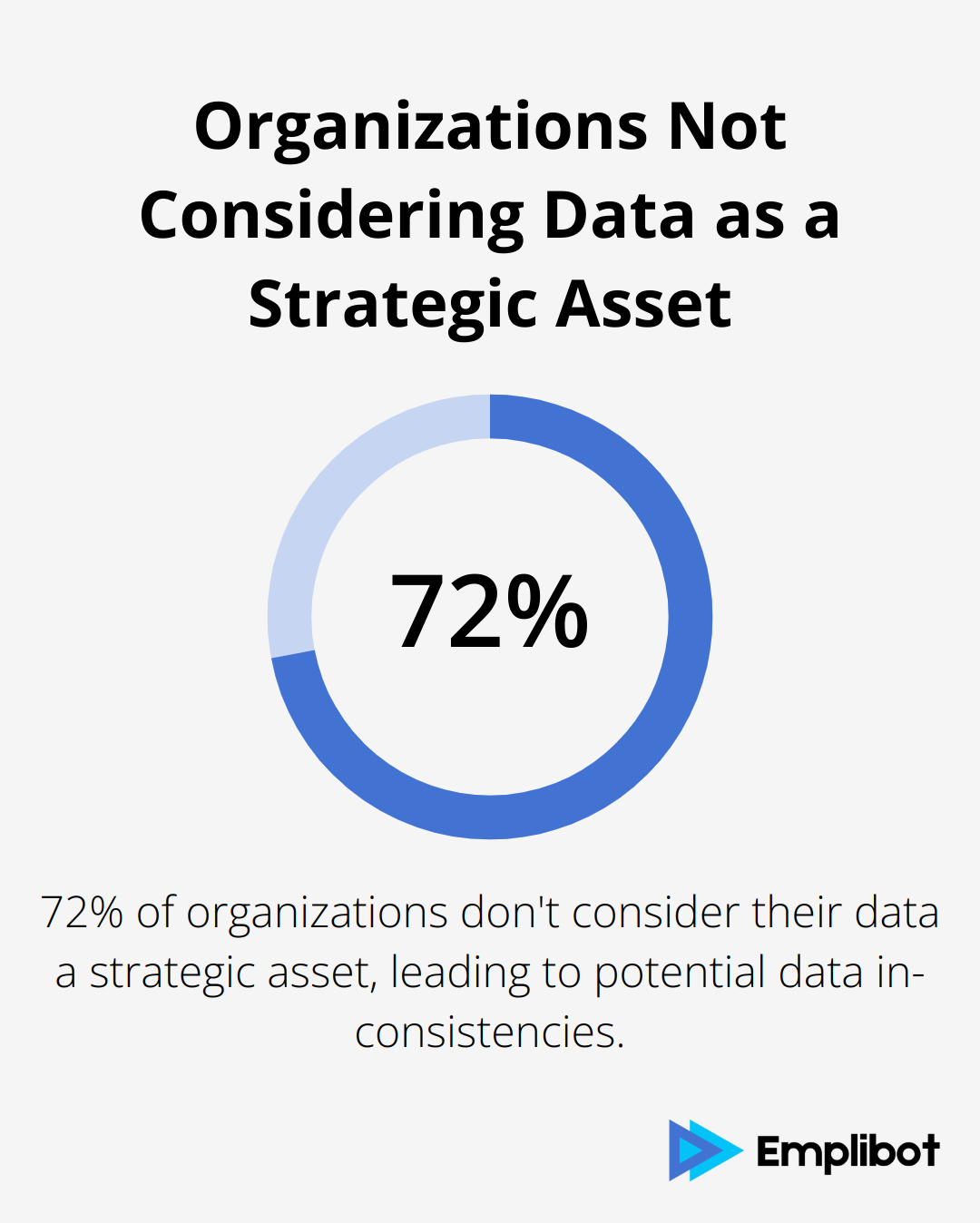

Inconsistent data formats and contradictory information present major hurdles for AI systems. A recent study found that 72% of organizations don’t consider their data a strategic asset, which can lead to data inconsistencies.

For example, a multinational company faced challenges when date formats varied across different regions. This inconsistency led to errors in time-sensitive predictions. The solution involved implementing a standardized date format across all data sources and using automated data validation tools to catch inconsistencies before they entered the AI pipeline.
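Standardizing dates usually means parsing each source with its own known format and writing everything out in one canonical form such as ISO 8601. The sketch below assumes each region's system uses exactly one format; the `REGION_DATE_FORMATS` mapping and `normalize_date` helper are hypothetical.

```python
from datetime import datetime

# Hypothetical mapping: each region's source system uses one known date format.
REGION_DATE_FORMATS = {
    "US": "%m/%d/%Y",  # 03/04/2024 means March 4
    "EU": "%d/%m/%Y",  # 03/04/2024 means 3 April
    "JP": "%Y/%m/%d",
}


def normalize_date(raw: str, region: str) -> str:
    """Parse a region-specific date string and return it as ISO 8601 (YYYY-MM-DD).

    Raises ValueError for unknown regions or strings that don't match the region's
    format, so malformed rows are caught before they reach the AI pipeline.
    """
    fmt = REGION_DATE_FORMATS.get(region)
    if fmt is None:
        raise ValueError(f"no date format registered for region {region!r}")
    return datetime.strptime(raw.strip(), fmt).date().isoformat()


print(normalize_date("03/04/2024", "US"))  # 2024-03-04
print(normalize_date("03/04/2024", "EU"))  # 2024-04-03
print(normalize_date("2024/04/03", "JP"))  # 2024-04-03
```

Failing loudly on a mismatch is deliberate: silently guessing between day-first and month-first formats is exactly how the ambiguity in the example above creeps into training data.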

Biased or Unrepresentative Data

Bias in data can lead to unfair or discriminatory AI outcomes. Studies have uncovered significant disparities in error rates for facial recognition systems based on skin tone and gender.
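Disparities like these only show up when performance is measured per group rather than as a single overall number. A minimal sketch of that kind of audit, assuming you have true labels, predictions, and a group label for each example (the group names here are placeholders):

```python
from collections import defaultdict
from collections.abc import Hashable, Sequence


def error_rates_by_group(y_true: Sequence, y_pred: Sequence,
                         groups: Sequence[Hashable]) -> dict[Hashable, float]:
    """Share of wrong predictions within each subgroup."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("y_true, y_pred and groups must have the same length")
    totals: dict = defaultdict(int)
    errors: dict = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        errors[group] += int(truth != pred)
    return {g: errors[g] / totals[g] for g in totals}


def max_disparity(rates: dict[Hashable, float]) -> float:
    """Gap between the error rates of the worst- and best-served groups."""
    return max(rates.values()) - min(rates.values())


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
rates = error_rates_by_group(y_true, y_pred, groups)
print(rates)                 # {'a': 0.0, 'b': 0.5}
print(max_disparity(rates))  # 0.5
```

Here the overall error rate is 25%, which hides the fact that every error falls on group "b".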

To address this issue, organizations should perform thorough analyses of their training data to identify potential biases. Techniques like stratified sampling help ensure that each population group is represented in training and evaluation sets. This approach can help reduce bias in various AI applications, such as job recommendation systems.
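A minimal sketch of proportional stratified sampling: records are grouped by a chosen attribute and the same fraction is drawn from each group, with at least one record per group so small groups aren't lost. Note that this preserves the group mix of the source data; it does not correct a source that is itself unrepresentative, which is why the analysis step comes first. The `group` field and the `stratified_sample` helper are hypothetical.

```python
import random
from collections import defaultdict
from typing import Any


def stratified_sample(records: list[dict[str, Any]], key: str,
                      fraction: float, seed: int = 0) -> list[dict[str, Any]]:
    """Draw the same fraction from every group defined by `key` (proportional allocation).

    Each non-empty group contributes at least one record, so small groups are not dropped.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    rng = random.Random(seed)  # seeded so the sample is reproducible
    strata: dict[Any, list[dict[str, Any]]] = defaultdict(list)
    for r in records:
        strata[r[key]].append(r)

    sample: list[dict[str, Any]] = []
    for group in strata.values():
        k = max(1, round(len(group) * fraction))
        sample.extend(rng.sample(group, k))
    return sample


data = [{"group": "A", "id": i} for i in range(80)] + [{"group": "B", "id": i} for i in range(20)]
sample = stratified_sample(data, key="group", fraction=0.1)
print(sum(r["group"] == "A" for r in sample), sum(r["group"] == "B" for r in sample))  # 8 2
```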

Outdated or Irrelevant Information

Using outdated or irrelevant data can lead to inaccurate predictions in AI models. Data that accurately described the world when it was collected can stop reflecting current behavior, as the fraud detection example earlier in this post showed.

Organizations can tackle this problem by implementing a data freshness metric. Any data older than a predetermined threshold (which varies depending on the industry and use case) should be automatically flagged for review or update. This approach can significantly improve the accuracy of AI models, such as those used in fraud detection.
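One way to express a data freshness metric is the share of records updated within a per-source time window. The sketch below assumes each record carries a timezone-aware `updated_at` timestamp; the source names and thresholds in `MAX_AGE` are placeholders, not recommendations.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Optional

# Hypothetical per-source thresholds; the right values depend on industry and use case.
MAX_AGE = {
    "transactions": timedelta(days=30),
    "customer_profiles": timedelta(days=365),
}


def stale_records(records: list[dict[str, Any]], source: str,
                  now: Optional[datetime] = None) -> list[dict[str, Any]]:
    """Records whose `updated_at` is older than the source's threshold (flag for review)."""
    if now is None:
        now = datetime.now(timezone.utc)
    limit = MAX_AGE[source]
    return [r for r in records if now - r["updated_at"] > limit]


def freshness_ratio(records: list[dict[str, Any]], source: str,
                    now: Optional[datetime] = None) -> float:
    """Share of records still within the freshness threshold (1.0 means all fresh)."""
    if not records:
        return 1.0
    return 1 - len(stale_records(records, source, now)) / len(records)


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
txns = [
    {"id": 1, "updated_at": datetime(2024, 5, 20, tzinfo=timezone.utc)},
    {"id": 2, "updated_at": datetime(2024, 3, 1, tzinfo=timezone.utc)},
]
print([r["id"] for r in stale_records(txns, "transactions", now)])  # [2]
print(freshness_ratio(txns, "transactions", now))                   # 0.5
```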

These common data quality issues can significantly impact the performance and reliability of AI systems. Addressing them requires a combination of technological solutions and human expertise. In the next section, we’ll explore effective strategies for improving data quality and ensuring AI models are built on a solid foundation of reliable, accurate, and relevant data.

How to Improve Data Quality for AI

At Emplibot, we know that improving data quality for AI requires a comprehensive strategy. Here are practical steps you can take to enhance your data quality and boost your AI performance.

Implement Rigorous Data Cleaning Processes

Start by establishing a robust data cleaning pipeline. Use tools like Python’s Pandas library or R’s tidyverse package to handle missing values, remove duplicates, and correct inconsistencies. A financial services company reduced errors in their fraud detection model by 30% after implementing automated data cleaning processes.
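To keep it self-contained, the sketch below uses only the Python standard library; in pandas the same steps map roughly onto `Series.str.strip()` and `Series.str.lower()`, `DataFrame.drop_duplicates()`, and `Series.fillna()` with the column median. It assumes records are flat dictionaries with hashable values, and median imputation is just one of several reasonable choices. The `clean` function and its parameters are hypothetical.

```python
import statistics
from typing import Any

Record = dict[str, Any]


def clean(records: list[Record], numeric_fields: list[str], text_fields: list[str]) -> list[Record]:
    """Minimal cleaning pass: normalize text, drop duplicates, impute numeric gaps with the median."""
    # 1. Correct simple inconsistencies: trim whitespace and lowercase text fields.
    normalized = []
    for r in records:
        r = dict(r)  # copy so the caller's data is left untouched
        for f in text_fields:
            if isinstance(r.get(f), str):
                r[f] = r[f].strip().lower()
        normalized.append(r)

    # 2. Remove exact duplicates (after normalization), keeping the first occurrence.
    seen = set()
    deduped = []
    for r in normalized:
        fingerprint = tuple(sorted(r.items()))
        if fingerprint not in seen:
            seen.add(fingerprint)
            deduped.append(r)

    # 3. Fill missing numeric values with the median of the observed values.
    for f in numeric_fields:
        observed = [r[f] for r in deduped if r.get(f) is not None]
        if observed:
            median = statistics.median(observed)
            for r in deduped:
                if r.get(f) is None:
                    r[f] = median
    return deduped
```

Normalizing before deduplicating matters: " Alice " in Berlin and "alice" in "berlin " only collapse into one record once their text has been standardized.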

Don’t overlook outlier detection. Techniques like the Interquartile Range (IQR) method or machine learning algorithms like Isolation Forests can help identify and handle anomalies that could skew your AI models. The IQR method computes IQR = Q3 - Q1 and flags as outliers any points below Q1 - k × IQR or above Q3 + k × IQR, where k is a chosen factor (1.5 is a common default).
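A minimal sketch of the IQR rule using Python's `statistics.quantiles`; the choice of the "inclusive" quartile method and the default k = 1.5 are assumptions, and other quartile conventions give slightly different fences. Isolation Forests (available, for example, as scikit-learn's `IsolationForest`) are better suited to multivariate data and are not shown here.

```python
import statistics


def iqr_outliers(values: list[float], k: float = 1.5) -> list[float]:
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


readings = [10, 12, 12, 13, 12, 11, 14, 13, 15, 102]
print(iqr_outliers(readings))  # [102]
```

For these readings Q1 = 12 and Q3 = 13.75, so the fences sit at 9.375 and 16.375 and only 102 falls outside them. Because quartiles ignore extreme values, a single large outlier cannot stretch the fences the way it would stretch a mean-and-standard-deviation rule.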

Establish Clear Data Governance Policies

Create a data governance framework that defines roles, responsibilities, and processes for data management. This should include guidelines for data collection, storage, and usage. A telecommunications company saw a 25% improvement in customer churn prediction accuracy after implementing a comprehensive data governance policy.
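Governance is mostly about people and process, but some teams also record the rules in a machine-readable form so pipelines can enforce them. The sketch below is one hypothetical way to do that: each dataset gets an owner, a steward, a retention guideline, and a list of approved uses, and a check runs before any model consumes the data. `DatasetPolicy`, `POLICIES`, `check_usage`, and every name and value in them are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DatasetPolicy:
    """Hypothetical governance record for one dataset."""
    owner: str           # accountable for the dataset and approves new uses
    steward: str         # handles day-to-day quality issues
    retention_days: int  # storage guideline
    allowed_purposes: frozenset[str] = field(default_factory=frozenset)


POLICIES = {
    "customer_events": DatasetPolicy(
        owner="head-of-crm",
        steward="crm-data-team",
        retention_days=730,
        allowed_purposes=frozenset({"churn_prediction", "service_analytics"}),
    ),
}


def check_usage(dataset: str, purpose: str) -> str:
    """Return the steward to contact if the use is approved; raise PermissionError otherwise."""
    policy = POLICIES.get(dataset)
    if policy is None:
        raise PermissionError(f"{dataset!r} has no governance policy; register it first")
    if purpose not in policy.allowed_purposes:
        raise PermissionError(f"{purpose!r} is not an approved use of {dataset!r}; ask {policy.owner}")
    return policy.steward


print(check_usage("customer_events", "churn_prediction"))  # crm-data-team
```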

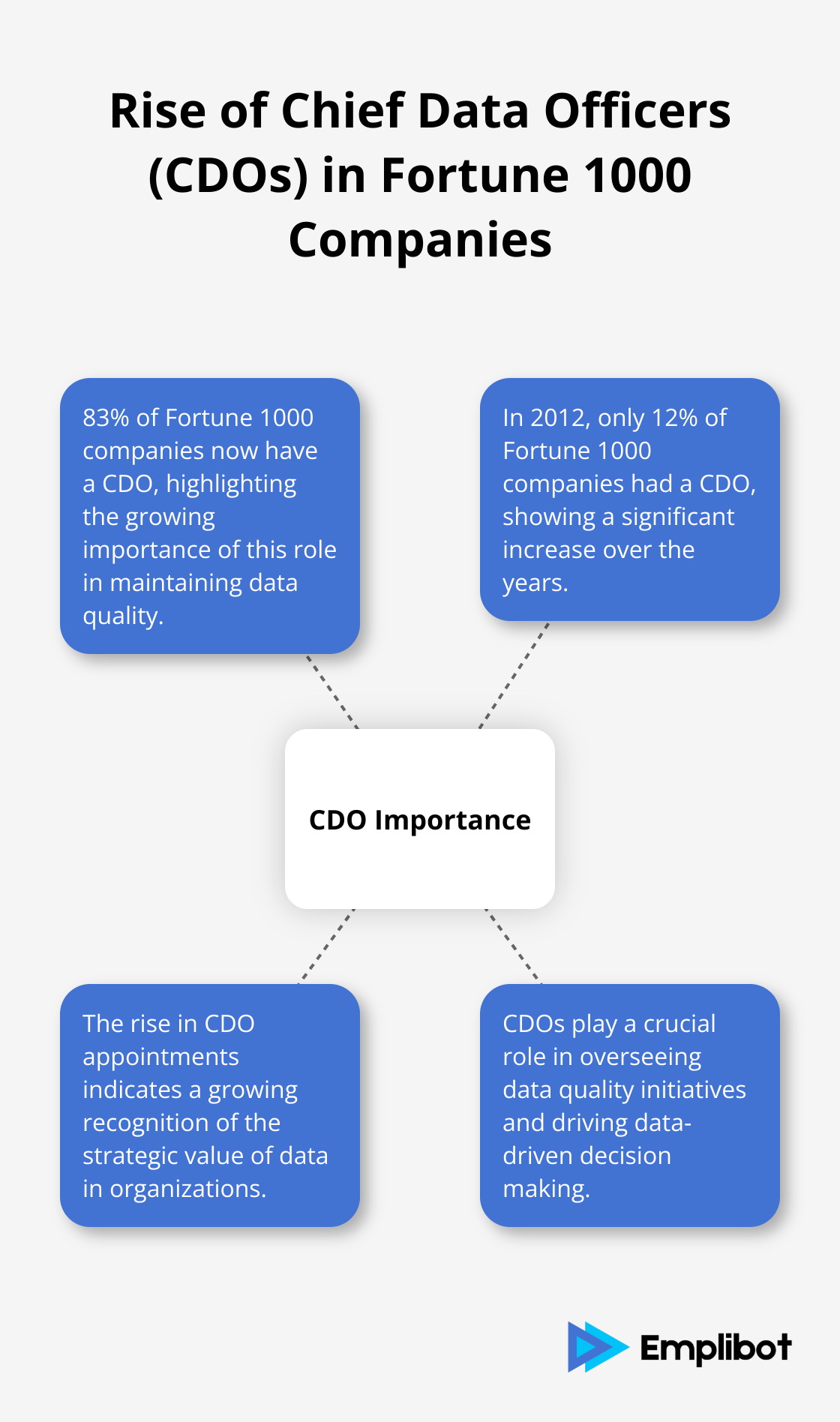

Consider appointing a Chief Data Officer (CDO) to oversee data quality initiatives. According to NewVantage Partners, 83% of Fortune 1000 companies now have a CDO, up from just 12% in 2012, highlighting the growing importance of this role in maintaining data quality.

Set Up Continuous Data Monitoring

Implement automated data quality checks throughout your data pipeline. Tools like Apache NiFi or Talend can help you set up real-time data quality monitoring. A retail company detected and corrected data inconsistencies 40% faster after implementing continuous monitoring, leading to more accurate inventory predictions.
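NiFi and Talend are configured through their own tooling, which we don't reproduce here. The core of continuous monitoring is simpler: summarize each incoming batch and compare it with a known-good baseline. The sketch below tracks row count and per-column null rates; the metric names, tolerances, and helpers are hypothetical.

```python
from typing import Any

Record = dict[str, Any]


def batch_metrics(records: list[Record], columns: list[str]) -> dict[str, float]:
    """Summarize a batch: row count plus the null rate of each column."""
    n = len(records)
    metrics: dict[str, float] = {"row_count": float(n)}
    for c in columns:
        nulls = sum(1 for r in records if r.get(c) is None)
        metrics[f"null_rate:{c}"] = nulls / n if n else 1.0
    return metrics


def detect_drift(current: dict[str, float], baseline: dict[str, float],
                 max_null_rate_increase: float = 0.05,
                 max_row_count_change: float = 0.5) -> list[str]:
    """Compare a batch's metrics with the baseline and describe anything out of tolerance."""
    alerts = []
    base_rows = baseline["row_count"]
    if base_rows and abs(current["row_count"] - base_rows) / base_rows > max_row_count_change:
        alerts.append(f"row_count changed from {base_rows:.0f} to {current['row_count']:.0f}")
    for name, value in current.items():
        previous = baseline.get(name, 0.0)
        if name.startswith("null_rate:") and value - previous > max_null_rate_increase:
            alerts.append(f"{name} rose from {previous:.2f} to {value:.2f}")
    return alerts


cols = ["sku", "qty"]
baseline = batch_metrics([{"sku": "a", "qty": 1}] * 100, cols)
today = batch_metrics([{"sku": "a", "qty": None}] * 20 + [{"sku": "a", "qty": 1}] * 80, cols)
print(detect_drift(today, baseline))  # ['null_rate:qty rose from 0.00 to 0.20']
```

Running a comparison like this on every batch, and alerting on a non-empty result, is what turns one-off checks into monitoring.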

Don’t rely solely on automated checks. Regular manual audits by domain experts can catch subtle issues that automated systems might miss. Schedule quarterly data quality reviews with your team to ensure ongoing data integrity.

Leverage Domain Expertise in Data Curation

Involve subject matter experts in your data curation process. Their insights can prove invaluable in identifying relevant features and spotting domain-specific anomalies. A healthcare provider improved the accuracy of their diagnostic AI by 15% after involving medical professionals in data curation.

Consider implementing a data stewardship program where domain experts take responsibility for the quality of data in their respective areas. This distributed approach can lead to more accurate and contextually relevant data for your AI models.

Improving data quality is an ongoing process. It requires both technological solutions and human expertise, and both are essential for building more accurate, reliable, and effective AI systems.

Final Thoughts

Data quality in AI forms the foundation of successful artificial intelligence initiatives. Organizations that prioritize high-quality data experience improved decision-making, increased operational efficiency, and enhanced customer satisfaction. These companies position themselves to adapt to changing market conditions and capitalize on new opportunities as they arise.

The competitive advantage gained through superior data quality will only grow as AI continues to shape industries across the board. Companies that neglect data quality risk falling behind, while those that embrace it will lead in innovation and performance. We at Emplibot understand the challenges of maintaining high-quality data for AI applications.

Our AI-powered content creation platform automates the entire process from keyword research to content distribution, helping businesses maintain a consistent online presence with high-quality, engaging content. The time to prioritize data quality in AI initiatives is now: assess your current data practices, implement robust data governance policies, and invest in tools and processes that support ongoing data quality improvement.
