NVIDIA, the tech giant known for its graphics processing units, is making waves in the AI world with its focus on Small Language Models (SLMs). At Emplibot, we’re closely watching this shift towards more compact and efficient AI solutions.

NVIDIA’s bet on SLMs promises to revolutionize how we approach artificial intelligence, offering advantages that could reshape the industry. Let’s explore why these smaller models might just be the future of agentic AI.

Why NVIDIA Bets Big on Small Language Models

The Efficiency Revolution

NVIDIA’s recent focus on Small Language Models (SLMs) marks a significant shift in the AI landscape. The company now champions these compact models as the future of agentic AI, largely because of how efficient they are.

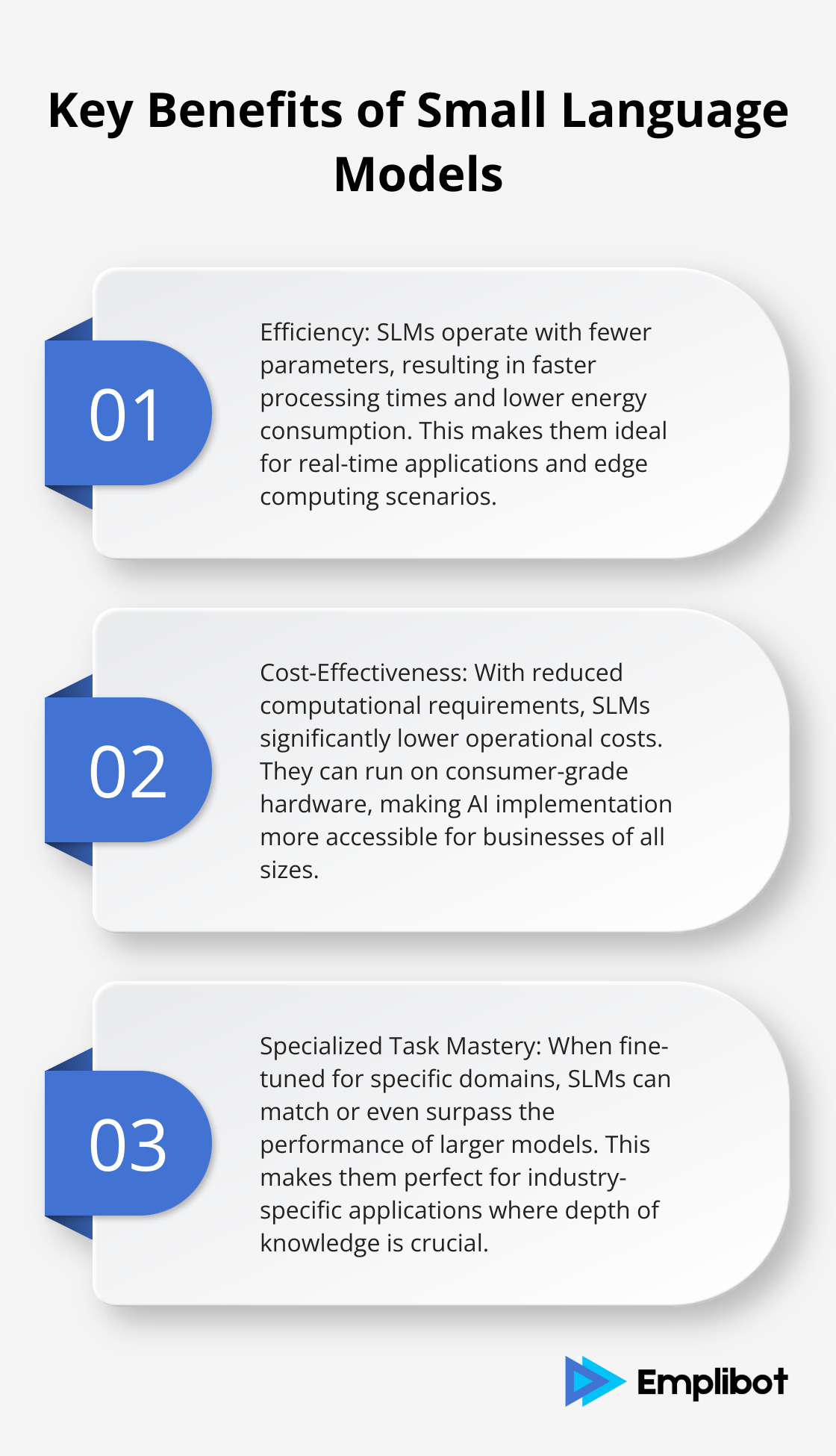

Compared with their larger counterparts, SLMs can perform specific tasks accurately while using far fewer computational resources. This efficiency translates to faster processing times and lower energy consumption, both of which matter more as AI workloads scale.

SLMs vs. Large Language Models: A Stark Contrast

When comparing SLMs to large language models (LLMs), the differences stand out. LLMs like GPT-3 carry 175 billion parameters, while SLMs operate with a fraction of that. For instance, NVIDIA reports that its Nemotron-H family delivers accuracy comparable to 30B-parameter LLMs at a fraction of the inference cost. This allows businesses to deploy AI solutions without massive computational infrastructure.
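To make the size gap concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need. It assumes 16-bit weights (2 bytes per parameter) and ignores activations and caches. The 9-billion-parameter SLM is an illustrative size, not a specific product, and the function name `weight_memory_gb` is our own.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory needed to hold model weights, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


gpt3_gb = weight_memory_gb(175e9)  # GPT-3: 175 billion parameters
slm_gb = weight_memory_gb(9e9)     # hypothetical 9-billion-parameter SLM

print(f"GPT-3 weights at 16-bit: {gpt3_gb:.0f} GB")
print(f"9B SLM weights at 16-bit: {slm_gb:.0f} GB")
```

Under these assumptions the larger model needs roughly 350 GB for weights alone, which means several data-center GPUs, while the smaller one needs about 18 GB and can fit on a single high-end card.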

NVIDIA’s SLM Innovation Pipeline

NVIDIA’s researchers have also outlined how to move existing agents from LLMs to SLMs. Their six-step conversion algorithm includes:

- Data collection

- Task clustering

- Model selection

- Fine-tuning

- Deployment

- Continuous improvement

This approach allows for the creation of highly specialized AI agents that can outperform larger models in specific domains.
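The early steps of a pipeline like this can be sketched in a few lines. The example below is a minimal illustration, not NVIDIA’s implementation: it assumes agent calls have already been logged with a task label (in practice the labels would come from a clustering step), and it picks the tasks with enough examples to be worth fine-tuning a small model on. The task names, the `min_examples` threshold, and both helper functions are hypothetical.

```python
from collections import defaultdict

# Hypothetical logged agent calls: (task label, prompt).
logged_calls = [
    ("extract_invoice_fields", "Pull the total from this invoice"),
    ("extract_invoice_fields", "Find the due date on this invoice"),
    ("summarize_ticket", "Summarize this support ticket"),
    ("summarize_ticket", "Give a one-line summary of this ticket"),
    ("summarize_ticket", "Summarize the customer complaint"),
    ("open_ended_chat", "What do you think about the weather?"),
]


def cluster_by_task(calls):
    """Data collection + task clustering: group prompts by task label."""
    clusters = defaultdict(list)
    for task, prompt in calls:
        clusters[task].append(prompt)
    return dict(clusters)


def select_candidates(clusters, min_examples=2):
    """Model selection input: keep tasks with enough data to fine-tune on."""
    return sorted(
        task for task, prompts in clusters.items()
        if len(prompts) >= min_examples
    )


clusters = cluster_by_task(logged_calls)
print(select_candidates(clusters))
```

Here the two repetitive tasks qualify for a specialized model, while the one-off open-ended request does not. Fine-tuning, deployment, and continuous improvement would follow from that shortlist.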

Real-World Applications of SLMs

The practical implications of NVIDIA’s SLM focus reach far and wide. These models excel in edge computing scenarios, where processing power is limited. They also shine in creating specialized AI assistants for industries like healthcare, finance, and customer service.

NVIDIA’s bet on SLMs isn’t just about technology – it’s about making AI more accessible and practical for businesses of all sizes. As we move forward, the potential for these compact yet powerful models continues to grow, opening new possibilities for AI integration across various sectors.

Why Are Small Language Models Game-Changers?

Lightning-Fast Responses

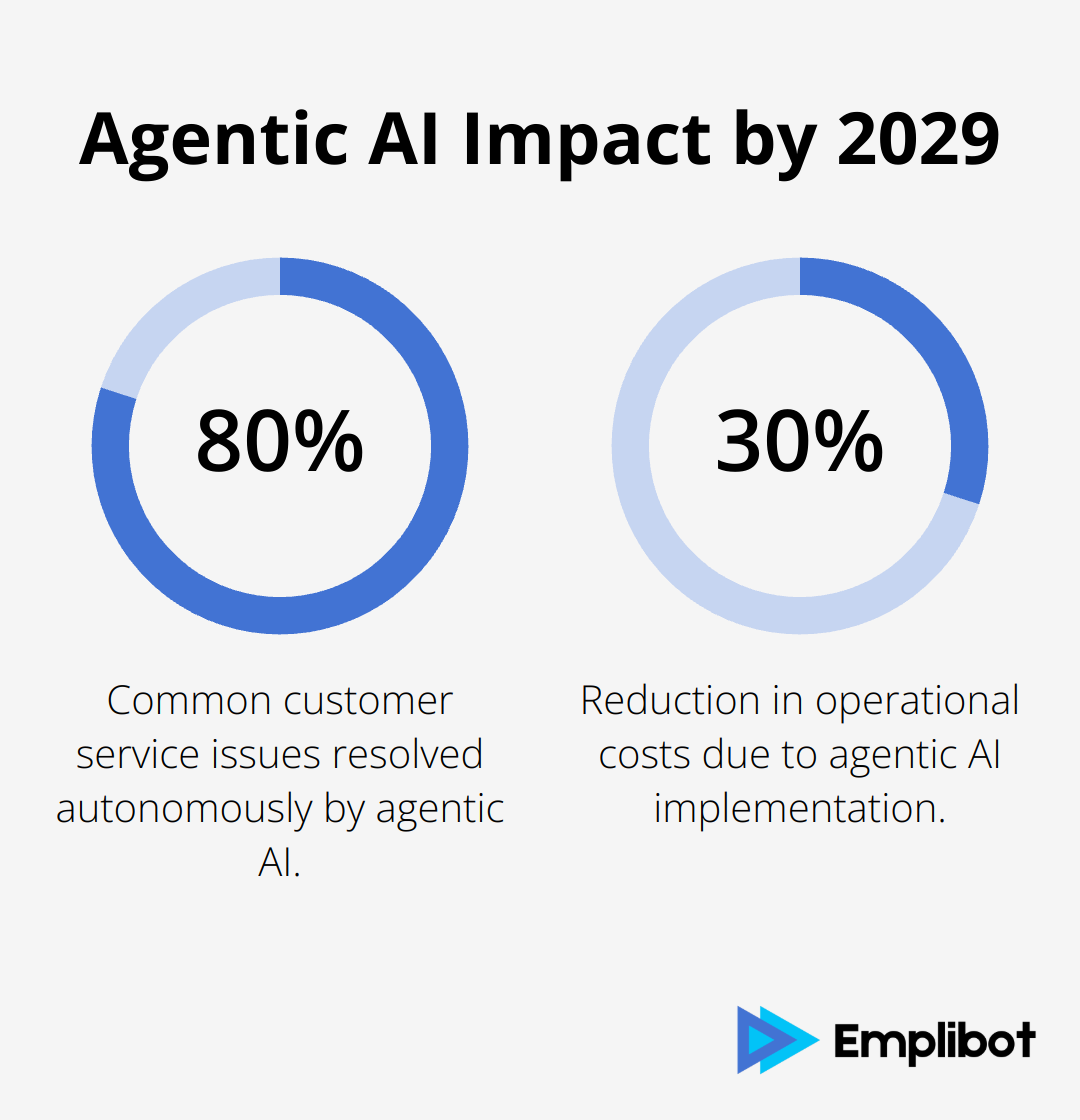

Small Language Models (SLMs) stand out for their speed. Their compact design allows for near-instantaneous processing, a critical advantage in real-time applications. Customer service chatbots, for example, benefit greatly from this rapid response capability. Gartner predicts that by 2029, agentic AI will autonomously resolve 80% of common customer service issues without human intervention, leading to a 30% reduction in operational costs. The forecast covers agentic AI broadly, but fast, inexpensive models such as SLMs are well suited to exactly this kind of high-volume, latency-sensitive work.

Minimal Resource Requirements

SLMs stand out for their efficiency in resource utilization. Unlike their larger counterparts, which demand extensive GPU power, SLMs often operate smoothly on consumer-grade hardware. This accessibility democratizes AI technology, opening doors for smaller businesses and developers who may lack high-end infrastructure. Microsoft’s Phi-4-reasoning model exemplifies this efficiency: according to Microsoft, it achieves better performance than OpenAI o1-mini and DeepSeek-R1-Distill-Llama-70B at most tasks despite its significantly smaller size.

Cost-Effective AI Implementation

The operational costs associated with SLMs significantly undercut those of large language models. This cost-effectiveness stems from reduced energy consumption, lower hardware requirements, and faster training times. The resulting cost gap positions SLMs as an attractive option for businesses aiming to implement AI solutions without straining their budgets.

Specialized Task Mastery

While SLMs may not match the broad capabilities of larger models, they excel in focused domains. When fine-tuned for specific tasks, these models can achieve (and sometimes surpass) the performance levels of their larger counterparts. This specialized prowess makes SLMs ideal for industry-specific applications where depth of knowledge in a particular area trumps general knowledge breadth.
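One common way to combine both strengths is to route narrow, well-defined tasks to a specialized small model and send everything else to a general-purpose one. The sketch below shows that idea with stand-in functions in place of real model calls. `make_router`, the task names, and the bracketed output tags are all hypothetical and only illustrate the routing pattern.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def make_router(specialists: Dict[str, Handler], fallback: Handler):
    """Return a function that sends known tasks to a specialist SLM
    and anything else to the general-purpose fallback model."""
    def route(task: str, prompt: str) -> str:
        handler = specialists.get(task, fallback)
        return handler(prompt)
    return route


# Stand-ins for model calls; a real system would call model endpoints here.
def summarize_ticket_slm(prompt: str) -> str:
    return f"[slm:summarize_ticket] {prompt}"


def general_llm(prompt: str) -> str:
    return f"[llm:general] {prompt}"


route = make_router({"summarize_ticket": summarize_ticket_slm}, general_llm)

print(route("summarize_ticket", "Customer cannot log in"))
print(route("write_poem", "An ode to GPUs"))
```

The first request matches a task the small model was tuned for, so it stays on the cheaper path. The second falls through to the larger model, which keeps broad coverage without paying LLM costs for every call.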

As we explore the practical applications of SLMs, we’ll uncover how these compact powerhouses are reshaping various sectors and enabling new possibilities in AI integration.

Where Are SLMs Making a Real Impact?

Revolutionizing Edge Computing

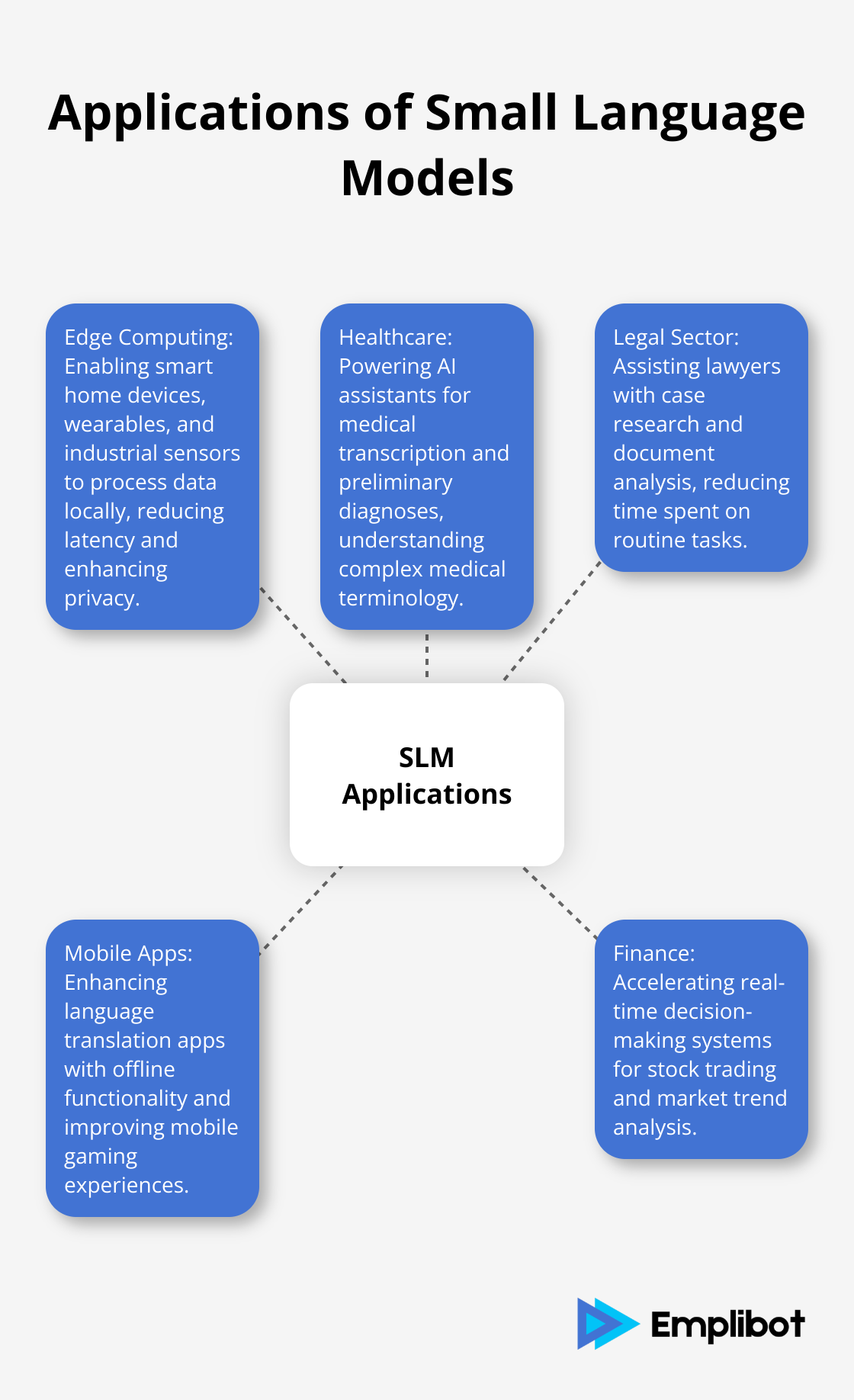

Small Language Models (SLMs) are transforming edge computing and Internet of Things (IoT) devices. NVIDIA’s solutions for enterprise edge, embedded edge, and industrial edge are turning on-device AI into real-world results. These models enable smart home devices, wearables, and industrial sensors to process data locally, which reduces latency and enhances privacy. A smart thermostat equipped with an SLM, for example, can analyze usage patterns and adjust temperatures in real time without sending data to the cloud. This local processing speeds up response times and addresses data privacy concerns, a critical factor in today’s privacy-conscious market.

Powering Industry-Specific AI Assistants

SLMs offer tailored solutions in specialized industries. These models have fewer parameters and are fine-tuned on a subset of data for specific use cases. Healthcare providers use SLM-powered assistants for tasks like medical transcription and preliminary diagnoses. These models (trained on specific medical datasets) understand complex medical terminology and provide accurate, context-aware responses. In the legal sector, SLMs assist lawyers with case research and document analysis, which significantly reduces the time spent on routine tasks.

Enhancing Mobile Experiences

The mobile app landscape benefits from SLMs’ ability to run efficiently on smartphones. Language translation apps now offer offline functionality, which makes them invaluable for travelers in areas with limited internet connectivity. Mobile gaming experiences improve with SLMs, which enable more sophisticated in-game AI opponents and dynamic storylines that adapt to player choices in real-time.

Accelerating Decision-Making Systems

In fast-paced environments like stock trading and cybersecurity, SLMs prove invaluable for real-time decision-making systems. These models quickly analyze market trends or detect anomalies in network traffic, which allows for immediate responses to potential threats or opportunities. The speed and efficiency of SLMs in these scenarios can mean the difference between a successful trade and a missed one, or between a thwarted cyberattack and a breach.

Optimizing Content Creation and Marketing

SLMs play a significant role in content creation and marketing automation. These models assist in generating targeted content, analyzing market trends, and personalizing customer communications. For businesses looking to streamline their content marketing efforts, AI-powered solutions like Emplibot offer comprehensive automation for WordPress blogs and social media platforms.

Final Thoughts

NVIDIA’s commitment to Small Language Models (SLMs) signals a transformative shift in the AI landscape. These compact powerhouses offer a combination of efficiency, speed, and cost-effectiveness that reshapes how businesses approach AI implementation. They reduce computational requirements and energy consumption while maintaining high performance in specialized tasks.

The implications of NVIDIA’s SLM focus are profound for businesses and developers. SLMs unlock new possibilities for specialized AI assistants, enhanced mobile applications, and improved real-time decision-making systems. The reduced costs and faster development cycles associated with SLMs make AI integration more feasible for organizations of all sizes, potentially accelerating innovation across sectors.

As the AI landscape evolves, tools like Emplibot lead the charge in leveraging AI for marketing automation. Emplibot uses AI agents to streamline content creation, distribution, and optimization for businesses. It exemplifies how AI can enhance marketing strategies and drive business growth through automated WordPress blogs and social media management.
